The concept of a Thinking Machine is not new. Setting some mythologies aside, we can say that it all began, albeit very quietly, in the 17th century. Of course, news did not travel then at the speed it does today, and progress was slow partly because some fundamental discoveries, most obviously electricity, were still missing. We had to wait three centuries to hear the words Artificial Intelligence. I’ll tell you about that in a little while. And only in the last two years have we had free access to an AI, the famous ChatGPT by OpenAI, followed by others such as Gemini, Llama and Claude. Let me be more precise: strictly speaking these are not AI but LLMs, Large Language Models, yet we all call them AI.

The Thinking Machine starts

In 1623 Wilhelm Schickard, a German scientist, built the first mechanical machine capable of performing mathematical calculations with numbers up to six digits, though not autonomously. In 1642 Blaise Pascal built a machine that performed mathematical operations with carry. In 1674 Leibniz perfected a machine for addition and multiplication. Between 1834 and 1837 Charles Babbage designed the Analytical Engine, with features we might call similar to those of modern computers, but the project was never completed. Babbage’s most famous collaborator was Ada Lovelace, Lord Byron’s daughter, who developed some algorithms to instruct Babbage’s machine, such as the one to compute the Bernoulli numbers, a rather important numerical sequence in the analysis and study of polynomials. Ada Lovelace is considered the first programmer, among both men and women. Her name, Ada, was given to a programming language developed in the late 1970s for the U.S. Department of Defense. She also studied the binary algebra invented by George Boole.

These are the first famous names in AI’s story: Blaise Pascal, Gottfried Leibniz, Charles Babbage, George Boole and Ada Lovelace.

In 1937 Claude Shannon, another of the Greats, showed in his master’s thesis at MIT how Boolean algebra and binary operations, a mathematics based only on the symbols 0 and 1, could represent the relay and switching circuits used in telephone networks. Shannon is also the author of one of the most famous and widely used theorems in information theory.

An important step in AI’s story was Alan Turing’s 1936 article, On Computable Numbers, with an Application to the Entscheidungsproblem, which laid the foundation for concepts such as computability.

Alan Turing, the genius of AI

The Turing machine was born in this very article. The famous Turing test, meant to decide whether we are talking to a human being or a machine, came later, in 1950. According to the test, a machine can be considered intelligent if its behavior, observed by a human being, is indistinguishable from that of a person. If it reminds you of the Voight-Kampff test that Deckard gives Rachael, well, it should. If you don’t know what I’m talking about, hurry up and watch Blade Runner.

In 1943 the first mathematical model of a neuron, a unit that can be either on or off, was proposed. This was the beginning of studies on neural networks. The two scientists behind it were neurophysiologist Warren McCulloch and mathematician Walter Pitts, who demonstrated that a logic circuit could be represented with a set of neurons and, vice versa, that the neurons in a network could be replaced with logic circuits. A system built on this scheme would then function like the human brain and would learn from its experience, or rather, from the data it could retrieve and was given. This approach was called evolutionist (today it is more often called connectionist), precisely because the machine could evolve by studying and correcting its own errors.
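
To make the neuron-as-logic-circuit idea concrete, here is a minimal sketch of a threshold unit in the spirit of McCulloch and Pitts. The function names, weights and thresholds are illustrative choices of mine, and using a negative weight for inhibition is a common simplification, not the exact formulation of the 1943 paper.

```python
def mp_neuron(inputs, weights, threshold):
    """Threshold unit: outputs 1 (on) when the weighted sum of its
    binary inputs reaches the threshold, otherwise 0 (off)."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


# Logic gates built from a single unit each (weights are assumptions).
def AND(a, b):
    return mp_neuron([a, b], [1, 1], threshold=2)


def OR(a, b):
    return mp_neuron([a, b], [1, 1], threshold=1)


def NOT(a):
    # Inhibition modelled here as a negative weight (a simplification).
    return mp_neuron([a], [-1], threshold=0)


def XOR(a, b):
    # A small network of units: (a OR b) AND NOT (a AND b).
    return AND(OR(a, b), NOT(AND(a, b)))


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "AND:", AND(a, b), "OR:", OR(a, b), "XOR:", XOR(a, b))
```

Each gate is one neuron, and XOR needs a small network of them, which is the sense in which logic circuits and networks of neurons can stand in for each other.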

In 1956 there was a conference at Dartmouth College attended by the top experts in neural networks and computational logic, in short the scientists of the new discipline, cybernetics. There a program called Logic Theorist (LT) was presented that came very close to human reasoning, because it was able to prove many theorems from Principia Mathematica. This method is the opposite of the evolutionist one: a top-down learning model, which produced an artificial intelligence called symbolic, precisely because it was based on symbols and rules.

AI evolves like a living being. Or does it?

In the symbolic approach, this means that the machine must be given the rules of a system, such as musical harmony, and then it creates a song on its own. Incidentally, this is the scheme on which Deep Blue was built, the IBM supercomputer that in 1996 became the first computer to win a game against reigning world champion Garry Kasparov, and in 1997 beat him in a full match.

In the evolutionist model, on the other hand, which is a bottom-up model, the machine is made to listen to thousands of songs and musical works. From these it works out the rules of harmony and melody and the sound of the notes, and then creates its own song.

It was at the Dartmouth conference that John McCarthy, one of its organizers and winner of the Turing Award in 1971, introduced the expression Artificial Intelligence.

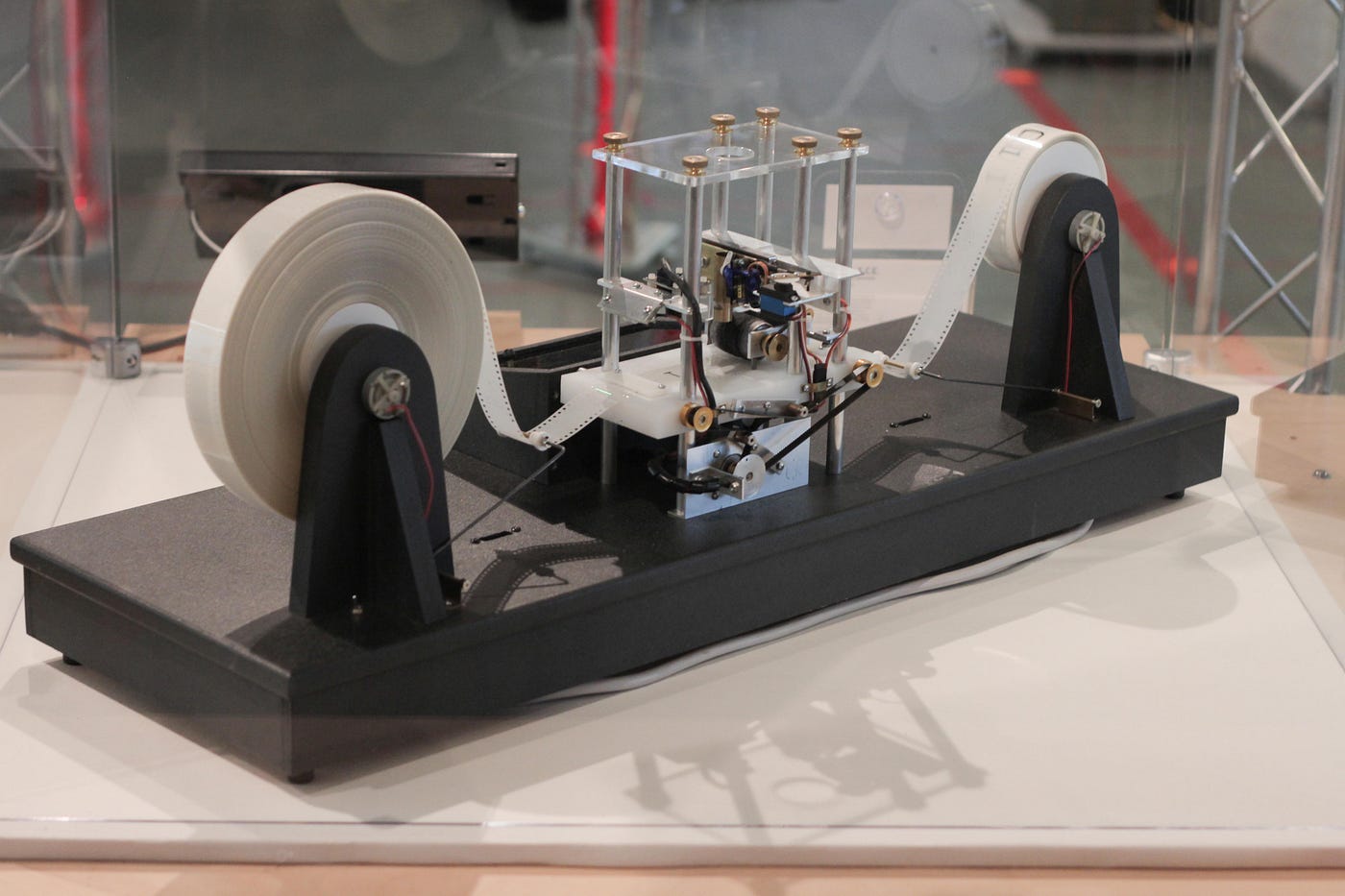

In 1957 Frank Rosenblatt built the first machine containing a neural network, called the Perceptron, with funding from the U.S. Office of Naval Research and the U.S. Air Force Air Development Center. The latter is located in the city of Rome, not the one in Italy but the one in the State of New York; there is also a branch in Verona, the Italian original one. The first demonstration of the Perceptron was in 1960, and it followed the evolutionist approach of McCulloch and Pitts.

When this prototype was presented, the New York Times came out with articles somewhere between enthusiastic and concerned, describing the machine as able to learn on its own and develop its own consciousness. Concerns about thinking machines are therefore nothing new: they date back at least 65 years. The Perceptron and its design introduced something that is today the basis of every Artificial Intelligence model, namely Deep Learning, with human-supervised data input. Don’t picture a modern computer: a huge machine full of cables, contacts, relays and even gears, weighing 8 tons, had been built to simulate the effect of just 8 neurons. Despite the great potential and the funding from the U.S. government, when Rosenblatt gave a demonstration of his Perceptron it did not make a great impression, because the most it could do was to distinguish right from left. Investors couldn’t wait 60 years for Rosenblatt’s artificial intelligence to grow up, and so efforts turned to top-down learning models: you teach the machine the rules of math, chess or photography, and then it creates its own products.
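
Telling left from right is small enough to reproduce in a few lines. What follows is a minimal sketch of the classic perceptron learning rule, not a reconstruction of Rosenblatt’s hardware: the four-cell "retina", the training samples and the function names are all hypothetical and chosen only for illustration.

```python
def predict(weights, bias, x):
    """Return 1 ("right") if the weighted sum plus bias is positive, else 0 ("left")."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=20, lr=1):
    """Perceptron learning rule: adjust the weights only when a prediction is wrong."""
    n_inputs = len(samples[0][0])
    weights = [0] * n_inputs
    bias = 0
    for _ in range(epochs):
        mistakes = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)
            if error != 0:
                mistakes += 1
                # Move the weights toward the correct answer for this sample.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:  # every sample classified correctly: stop early
            break
    return weights, bias


# Hypothetical data: a mark on a four-cell strip, label 0 = left half, 1 = right half.
samples = [
    ([1, 0, 0, 0], 0),
    ([0, 1, 0, 0], 0),
    ([0, 0, 1, 0], 1),
    ([0, 0, 0, 1], 1),
]

weights, bias = train_perceptron(samples)

if __name__ == "__main__":
    for x, target in samples:
        print(x, "->", predict(weights, bias, x), "(expected", target, ")")
```

Nobody tells the program which cells count as "right": it starts from zero weights and finds the rule by correcting its own mistakes on labelled examples, which is the bottom-up idea in miniature.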

Today, on the other hand, the approach to artificial intelligence is exactly Rosenblatt’s: provide the machine with data, lots of data and information, and let it discover for itself the rules that tie them together. The modern approach to AI is Pre-training, the P in GPT. The model generally used to build new AIs is therefore a bottom-up, evolutionary model: you provide huge amounts of data and, thanks to machine learning models, you teach the AI the difference between, for example, a cat and a dog, or a person and a tree. This is the meaning of LLM, Large Language Model: a large data set and a model to put it all together. All the information we have put on the web, our posts, photos and videos, is used by AI for its (or her? or his?) pre-training session.

So, as you have read so far, AI is a concept born more than three centuries ago, together with all the ethical questions it raises.

I mean, does AI have nightmares?